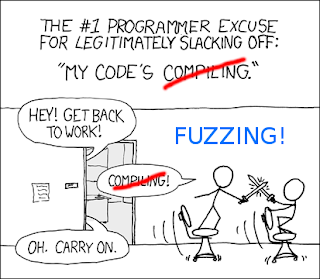

Fuzzing, and … Deep Learning?

Fuzzing, as you know, is a remarkably useful tool from the world of security testing. As Wikipedia (•) puts it, you provide unexpected or invalid data as inputs, and then look for exceptions, crashes, memory leaks, etc.

The trick here is to generate inputs that are “valid enough” in that they are not directly rejected by the parser, but do create unexpected behaviors deeper in the program and are “invalid enough” to expose corner cases that have not been properly dealt with.
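
To make the “valid enough / invalid enough” idea concrete, here is a minimal sketch of a mutation fuzzer. Everything in it is made up for illustration: `toy_parser` is a hypothetical target with an invented `HDR` + length-byte format, and `mutate` and `fuzz` are names I picked, not part of any real fuzzing tool.

```python
import random


def toy_parser(data: bytes) -> int:
    """Hypothetical target: expects b'HDR', one length byte, then a payload."""
    if len(data) < 4 or data[:3] != b"HDR":
        # Shallow check: inputs that are not "valid enough" stop here.
        raise ValueError("rejected by parser")
    length = data[3]
    payload = data[4:]
    # Deeper bug: the length field is trusted without checking the payload size,
    # so an oversized length raises IndexError.
    return sum(payload[i] for i in range(length))


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Overwrite one randomly chosen byte of the seed with a random value."""
    data = bytearray(seed)
    data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def fuzz(seed: bytes, trials: int, rng: random.Random):
    """Return (number of inputs rejected up front, list of crashing inputs)."""
    rejected, crashes = 0, []
    for _ in range(trials):
        candidate = mutate(seed, rng)
        try:
            toy_parser(candidate)
        except ValueError:
            rejected += 1
        except IndexError:
            crashes.append(candidate)
    return rejected, crashes


if __name__ == "__main__":
    rejected, crashes = fuzz(b"HDR\x04abcd", 2000, random.Random(0))
    print(f"rejected: {rejected}, crashes: {len(crashes)}")
```

Mutations that damage the `HDR` prefix are thrown out by the shallow check and teach you nothing. The interesting inputs keep the prefix intact and only corrupt the length byte, which is exactly the “valid enough, invalid enough” sweet spot.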

The difficulty with fuzzing is that, with limited exceptions, it is hard, if not impossible, to exhaustively explore all possible inputs. As a result, you tend to use heuristics to figure out what to fuzz, when to fuzz, the order to fuzz in, etc., all of which makes it a little bit of a black art.

Herewith a paper by Böttinger et al. (••) in which they use #DeepLearning, specifically reinforcement learning, to narrow down the “fuzz space”. Basically, fuzzing is modeled as a feedback-driven learning process in which new inputs are chosen based on the effects of the previous inputs, measured by things like the number of (unique or not) instructions executed, runtime, etc.
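
As a rough illustration of that feedback loop, here is a toy sketch. To be clear, this is not the paper's method: it is a stateless, epsilon-greedy simplification (basically a multi-armed bandit over mutation operators), where the paper uses deep Q-learning. The target `toy_target`, the three mutation operators, and the reward (how many nested checks an input gets past, standing in for a coverage-style signal) are all assumptions of mine.

```python
import random


def toy_target(data: bytes) -> int:
    """Hypothetical program under test; returns how deep the input got."""
    depth = 1
    for i, expected in enumerate(b"FUZZ"):
        if i < len(data) and data[i] == expected:
            depth += 1
        else:
            break
    return depth


def flip_bit(data: bytes, rng: random.Random) -> bytes:
    out = bytearray(data)
    out[rng.randrange(len(out))] ^= 1 << rng.randrange(8)
    return bytes(out)


def random_byte(data: bytes, rng: random.Random) -> bytes:
    out = bytearray(data)
    out[rng.randrange(len(out))] = rng.randrange(256)
    return bytes(out)


def random_upper(data: bytes, rng: random.Random) -> bytes:
    out = bytearray(data)
    out[rng.randrange(len(out))] = rng.randrange(ord("A"), ord("Z") + 1)
    return bytes(out)


# The "actions" the learner chooses between (illustrative names).
ACTIONS = {"flip_bit": flip_bit, "random_byte": random_byte, "random_upper": random_upper}


def rl_fuzz(seed: bytes, steps: int, rng: random.Random, epsilon=0.2, alpha=0.1):
    """Epsilon-greedy choice of mutation operator, rewarded by depth reached."""
    q = {name: 0.0 for name in ACTIONS}  # running value estimate per action
    current, best = seed, toy_target(seed)
    for _ in range(steps):
        if rng.random() < epsilon:
            name = rng.choice(list(ACTIONS))  # explore
        else:
            name = max(q, key=q.get)  # exploit the best estimate so far
        candidate = ACTIONS[name](current, rng)
        reward = toy_target(candidate)  # feedback from running the target
        q[name] += alpha * (reward - q[name])
        if reward >= best:  # keep inputs that get at least as deep
            current, best = candidate, reward
    return q, current, best


if __name__ == "__main__":
    q, current, best = rl_fuzz(b"AAAA", 5000, random.Random(0))
    print(q, current, best)
```

The point is only the shape of the loop: pick a mutation, run the target, turn what you observed into a reward, and let that reward steer which mutation you pick next.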

Yes, there is a ways to go here, but the preliminary data on this looks promising!

(•) Fuzzing — https://en.wikipedia.org/wiki/Fuzzing

(••) Deep Reinforcement Fuzzing — https://arxiv.org/pdf/1801.04589.pdf
